WatchSleepNet: A Novel Model and Pretraining Approach for Advancing Sleep Staging with Smartwatches

Proceedings of Machine Learning Research

Will Ke Wang, Bill Chen, Jiamu Yang, Hayoung Jeong, Leeor Hershkovich, Shekh Md Mahmudul Islam, Mengde Liu, Ali R Roghanizad, Md Mobashir Hasan Shandhi, Andrew R Spector, Jessilyn Dunn

Summary

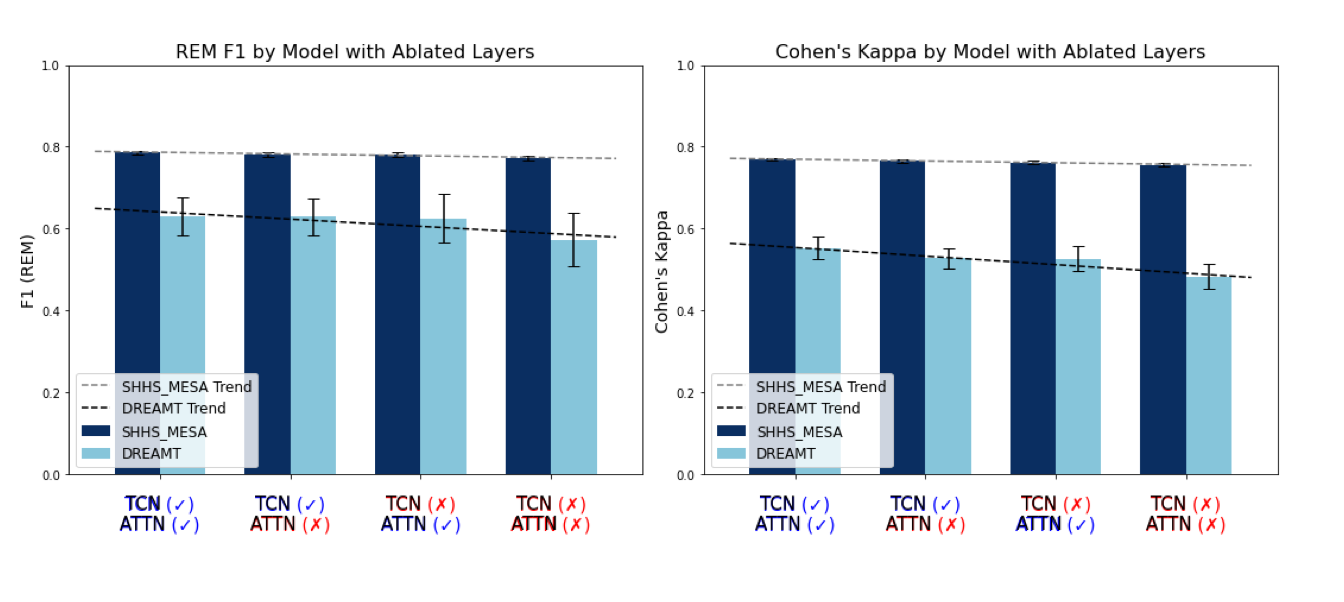

Sleep monitoring is essential for assessing overall health and managing sleep disorders, yet clinical adoption of consumer wearables remains limited by inconsistent performance and by the scarcity of open-source datasets and transparent codebases. In this study, we introduce WatchSleepNet, a novel, open-source, three-stage sleep staging algorithm. The model uses a sequence-to-sequence architecture that integrates Residual Networks (ResNet), Temporal Convolutional Networks (TCN), and Long Short-Term Memory (LSTM) networks with self-attention to capture both the spatial and temporal dependencies crucial for sleep staging. To address the limited availability of high-quality wearable photoplethysmography (PPG) datasets, WatchSleepNet leverages inter-beat interval (IBI) signals as a shared representation across polysomnography (PSG) and PPG modalities. By pretraining on large PSG datasets and fine-tuning on wrist-worn PPG signals, the model achieved a REM F1 score of 0.631 ± 0.046 and a Cohen’s Kappa of 0.554 ± 0.027, surpassing previous state-of-the-art methods. To promote transparency and further research, we publicly release our model and codebase, advancing reproducibility and accessibility in wearable sleep research and enabling the development of more robust, clinically viable sleep monitoring solutions.
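The idea of IBI as a shared representation is that both modalities reduce to the same quantity: a sequence of beat timestamps. These come from ECG R-peaks in PSG and from pulse peaks in wrist PPG. The summary does not specify how WatchSleepNet derives, resamples, or windows IBI. The sketch below is therefore only an illustration under stated assumptions. It assumes beat timestamps are already detected. The helper names (`beats_to_ibi`, `ibi_to_grid`, `segment_epochs`) and the 2 Hz resampling rate are hypothetical. The 30-second epoch length follows the conventional PSG scoring epoch.

```python
import numpy as np


def beats_to_ibi(beat_times_s):
    """Convert beat timestamps (seconds) into an inter-beat interval series.

    The same function applies whether beats came from ECG R-peaks (PSG)
    or PPG pulse peaks (smartwatch), which is what makes IBI usable as a
    modality-agnostic input.
    """
    t = np.asarray(beat_times_s, dtype=float)
    ibi = np.diff(t)   # seconds between consecutive beats
    ibi_times = t[1:]  # timestamp each interval at its closing beat
    return ibi_times, ibi


def ibi_to_grid(ibi_times, ibi, fs=2.0, duration_s=None):
    """Resample the irregularly spaced IBI series onto a uniform grid.

    Assumption: linear interpolation at a hypothetical rate `fs` (Hz);
    np.interp holds the edge values constant outside the beat range.
    """
    if duration_s is None:
        duration_s = ibi_times[-1]
    grid = np.arange(0.0, duration_s, 1.0 / fs)
    return grid, np.interp(grid, ibi_times, ibi)


def segment_epochs(signal, fs=2.0, epoch_s=30.0):
    """Split a uniformly sampled signal into non-overlapping epochs.

    Trailing samples that do not fill a whole epoch are dropped.
    """
    n = int(fs * epoch_s)
    n_epochs = len(signal) // n
    return np.asarray(signal)[: n_epochs * n].reshape(n_epochs, n)
```

For example, a steady 60 bpm heartbeat over two minutes yields an IBI of 1.0 s throughout. At 2 Hz that becomes 240 samples, or four 30-second epochs of 60 samples each. A sequence of such epochs is the kind of input a sequence-to-sequence staging model would consume. Real recordings would also need handling of missed or ectopic beats, which this sketch omits.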

Citation

Wang, Will Ke, et al. “WatchSleepNet: A Novel Model and Pretraining Approach for Advancing Sleep Staging with Smartwatches.” Proceedings of Machine Learning Research 287 (2025): 1-20.